Imagine a world where thoughts alone could bridge the gap for those silenced by severe paralysis. It’s not science fiction anymore. Recent advancements in neuroscience have brought us closer to that reality, with researchers successfully translating silent inner monologues into readable text. This development, centered on brain-computer interface technology, promises to transform lives for individuals with conditions like ALS or brainstem strokes. But how does it all work? Let’s dive in.

Understanding Inner Speech: The Silent Voice Inside Our Heads

We all do it, that quiet chatter in our minds. You know, replaying conversations, planning the day, or just musing over random thoughts. Inner speech, as experts call it, is essentially the imagination of speaking without moving a muscle or making a sound. It’s like hearing your voice narrate life, but entirely internal.

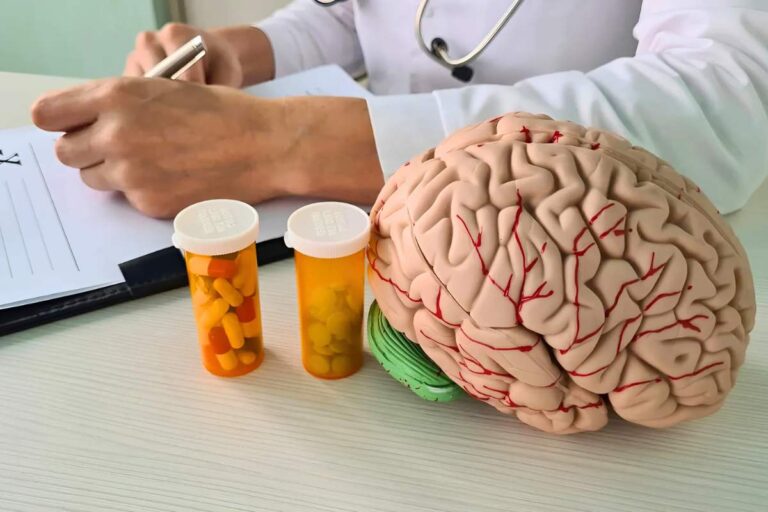

Scientists have long been fascinated by this phenomenon. Believe it or not, inner speech isn’t just idle daydreaming; it engages the brain’s motor cortex, the same area that controls actual speaking. Studies show it activates neural patterns similar to those of spoken words, though often at a lower intensity. For people with severe motor impairments, tapping into these signals could mean communicating without the exhaustion of trying to form sounds.

Think about it. Conditions like amyotrophic lateral sclerosis (ALS) or brainstem strokes rob individuals of muscle control, including speech. Traditional aids, such as eye-tracking devices, help, but they’re slow and cumbersome. Inner speech decoding? That could change everything, making interactions faster and more natural.

The Groundbreaking Study: From Brain Waves to Readable Words

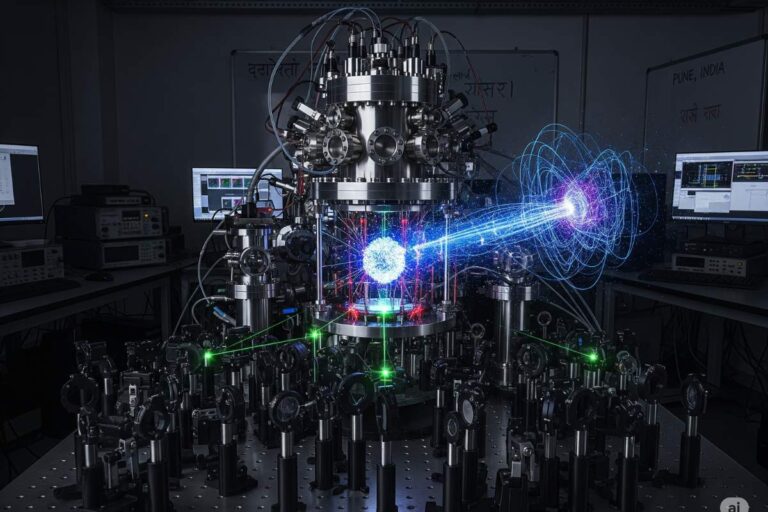

Here’s the exciting part. A team of researchers, led by experts at Stanford University, has made a significant leap. They implanted tiny microelectrodes into the motor cortex of four participants, all dealing with profound paralysis from ALS or strokes. These folks couldn’t speak audibly, but their brains were buzzing with activity.

The setup was straightforward yet ingenious. Participants were asked either to attempt speaking words aloud (as best they could) or simply to imagine saying them. The electrodes captured the neural signals in real time. What did they find? Attempted speech and inner speech produce overlapping patterns of activity in the motor cortex. The patterns are similar, but inner speech evokes a weaker signal. Fascinating, right?

Using this data, the team trained artificial intelligence models to interpret those imagined words. And get this: the system decoded sentences drawn from a massive vocabulary of up to 125,000 words, with accuracy as high as 74%. That’s not perfect, but it’s a proof of concept that inner speech can be “read” by machines.
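Speech-decoding results are usually scored with word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the decoded sentence into the intended one, divided by the intended sentence’s length. Assuming the 74% figure is the complement of such an error rate (roughly 26% of words wrong), the minimal sketch below shows how that metric is computed. The example sentences are made up for illustration and are not data from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")
    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost == 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Hypothetical sentences: one substitution ("glass" -> "class") and one deletion ("please").
reference = "i would like a glass of water please"
hypothesis = "i would like a class of water"
wer = word_error_rate(reference, hypothesis)
print(f"WER = {wer:.2f}, word accuracy = {1 - wer:.2f}")  # WER = 0.25, word accuracy = 0.75
```

Counting insertions as errors is why WER can exceed 100% on a very bad decode, which is one reason researchers report error rate rather than a simple "percent correct".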

One participant, for instance, silently counted shapes on a screen. The brain-computer interface picked up the unspoken numbers, like “one, two, three”, as the participant counted. It’s early days, but results like these highlight the potential for real-world application.

How Brain-Computer Interfaces Capture the Mind’s Whisper

Let’s break it down a bit more. Brain-computer interfaces, or BCIs, aren’t new; they’ve been around for over a decade, helping with everything from moving robotic arms to typing via thought. But decoding inner speech? That’s a fresh frontier.

The process starts with implantation. Microelectrodes, finer than a hair, are placed in the brain’s motor areas. These sensors detect electrical activity as neurons fire. For speech, it’s all about patterns: the brain rehearses movements even when you’re just thinking about talking.
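One common way intracortical BCIs turn raw electrode voltages into usable features is to count "threshold crossings": moments when a channel’s voltage dips below a multiple of its own RMS noise level, tallied in short time bins. The minimal sketch below assumes that approach, a −4.5 × RMS threshold, 20 ms bins, and a 30 kHz sampling rate; these are illustrative assumptions, not necessarily the exact preprocessing used in this study.

```python
import numpy as np


def threshold_crossing_counts(voltage, fs_hz, bin_ms=20.0, k=-4.5):
    """Count threshold crossings per time bin for each electrode.

    voltage: array of shape (n_samples, n_channels), assumed already high-pass filtered.
    A crossing is a sample where the signal drops below k * RMS of its channel
    after being at or above that level on the previous sample.
    """
    voltage = np.asarray(voltage, dtype=float)
    rms = np.sqrt(np.mean(voltage ** 2, axis=0))  # per-channel RMS
    threshold = k * rms                            # negative threshold per channel
    below = voltage < threshold
    # Mark only the first sample of each dip below threshold
    onsets = np.zeros_like(below)
    onsets[1:] = below[1:] & ~below[:-1]
    samples_per_bin = int(round(fs_hz * bin_ms / 1000.0))
    n_bins = onsets.shape[0] // samples_per_bin
    trimmed = onsets[: n_bins * samples_per_bin]
    # Group consecutive samples into bins and count crossings in each
    return trimmed.reshape(n_bins, samples_per_bin, -1).sum(axis=1)


# Simulated data (hypothetical): 1 s of noise on 4 channels, with
# large negative "spikes" injected every 3,000 samples on channel 2.
rng = np.random.default_rng(0)
fs = 30_000
signal = rng.normal(0.0, 1.0, size=(fs, 4))
signal[1000::3000, 2] -= 20.0
counts = threshold_crossing_counts(signal, fs)
print(counts.shape)  # (50, 4)
```

Each row of `counts` is then a compact snapshot of which electrodes were active in that 20 ms window, the kind of input a decoding model can learn from.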

In this study, AI algorithms analyzed those patterns. They learned to pick out inner speech and translate it into text. Accuracy varied: some words were decoded with pinpoint precision, while others needed more context. The system even handled elements it had not been specifically trained on, such as numbers during a counting task.

Funny thing is, inner speech isn’t identical to attempted speech. The signals are close, yet distinct enough for the AI to tell them apart. This could let users switch modes: have the system ignore inner speech when privacy matters, or activate decoding on demand.
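To make "similar but distinguishable" concrete, here is a toy sketch. It assumes, purely for illustration, that inner speech evokes the same spatial pattern across electrodes as attempted speech but at half the amplitude, and it uses simulated data with a nearest-centroid classifier. The 0.5 scaling, noise level, and channel count are hypothetical, and the study’s actual decoders were more sophisticated.

```python
import numpy as np

rng = np.random.default_rng(1)
n_channels, n_trials = 64, 200

# Hypothetical assumption: same spatial pattern for both modes,
# with inner speech at roughly half the amplitude of attempted speech.
pattern = rng.normal(0.0, 1.0, n_channels)
attempted = 1.0 * pattern + rng.normal(0.0, 0.5, (n_trials, n_channels))
inner = 0.5 * pattern + rng.normal(0.0, 0.5, (n_trials, n_channels))


def fit_centroids(x_a, x_b):
    """Nearest-centroid classifier: store the mean activity vector of each class."""
    return np.stack([x_a.mean(axis=0), x_b.mean(axis=0)])


def predict(centroids, x):
    """Assign each trial to the closest centroid (0 = attempted, 1 = inner)."""
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


# Train on the first half of the trials, evaluate on the held-out second half
half = n_trials // 2
centroids = fit_centroids(attempted[:half], inner[:half])
x_test = np.vstack([attempted[half:], inner[half:]])
y_test = np.array([0] * (n_trials - half) + [1] * (n_trials - half))
accuracy = (predict(centroids, x_test) == y_test).mean()
print(f"held-out accuracy: {accuracy:.2f}")
```

Because the two classes differ mainly in signal strength, even this simple distance-based rule separates them well on simulated data; real neural recordings are noisier and drift over time.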

Addressing Privacy: A Password to Unlock Thoughts

Privacy, though. That’s the elephant in the room when we’re talking mind-reading tech. What if the device eavesdrops on unintended thoughts? The researchers thought ahead.

They built in a safeguard: a password mechanism. Users imagine saying a specific phrase (in the study, the quirky “chitty chitty bang bang”) to unlock decoding. The system recognized this phrase with over 98% accuracy in tests. Once unlocked, it translates inner speech; otherwise, it stays dormant.
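The unlock logic can be pictured as a small state machine: while locked, every decoded phrase is discarded unless it matches the password with enough confidence; once unlocked, decoded text passes through. The sketch below is a hypothetical illustration. The `GatedDecoder` class, its `min_confidence` threshold, and the idea that an upstream detector supplies (text, confidence) pairs are assumptions, not details of the study’s system.

```python
from dataclasses import dataclass, field


@dataclass
class GatedDecoder:
    """Hypothetical gate: forwards decoded text only after the unlock phrase is detected.

    The keyword detector itself is out of scope; the caller supplies (text, confidence)
    pairs, and min_confidence is an illustrative threshold.
    """
    unlock_phrase: str = "chitty chitty bang bang"
    min_confidence: float = 0.9
    unlocked: bool = False
    output: list[str] = field(default_factory=list)

    def step(self, text: str, confidence: float) -> None:
        if not self.unlocked:
            # While locked, discard everything except a confident match of the phrase.
            if text == self.unlock_phrase and confidence >= self.min_confidence:
                self.unlocked = True
            return
        # Once unlocked, decoded inner speech is passed through as output.
        self.output.append(text)


decoder = GatedDecoder()
for text, conf in [("one two three", 0.95),            # stray thought: dropped
                   ("chitty chitty bang bang", 0.97),  # password: unlocks, not emitted
                   ("i am thirsty", 0.88)]:            # forwarded after unlock
    decoder.step(text, conf)
print(decoder.output)  # ['i am thirsty']
```

Using an unusual phrase as the key makes accidental unlocking less likely, since it is unlikely to turn up in everyday inner monologue.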

This isn’t foolproof, mind you. In experiments, the BCI sometimes caught stray thoughts, like silent counting during visual tasks. That raises questions about mental privacy in an era of advancing neurotech. Strategies are emerging, from better algorithms to ethical guidelines, aimed at ensuring users control what gets shared.

Experts emphasize the need for protection. As BCIs evolve, so must the frameworks guarding our inner worlds. It’s a balance: empowering communication without invading personal space.

The Broader Context: Evolution of BCIs and Their Impact on Paralysis

Step back for a moment. Brain-computer interfaces have come a long way since their inception. Early versions focused on movement, decoding signals to control cursors or prosthetics. Then came speech BCIs: systems that decode the neural activity produced when a user attempts to speak, even if the words come out garbled or not at all.

But inner speech decoding skips the physical effort. For someone with ALS, where muscles waste away, this could mean chatting at near-normal speeds without fatigue. Brainstem strokes, which paralyze facial muscles, leave thoughts intact but trapped. Unlocking them? Life-changing.

Research from places like Emory University and Georgia Tech echoes this. They’ve explored how inner speech engages the brain differently, sometimes involving areas tied to the theory of mind, our ability to imagine others’ perspectives. It’s not just about words; it’s simulating conversations internally.

Globally, millions face speech loss from neurological conditions. In the U.S. alone, ALS affects about 30,000 people, and strokes add thousands more. BCIs offer hope, but access remains limited: implants are invasive, costly, and require specialized expertise.

Challenges and Limitations: Why It’s Not Ready for Prime Time Yet

Of course, it’s not all smooth sailing. Current accuracy tops out at 74% for structured tasks; free-form thoughts? Errors creep in. The vocabulary is impressive, yet real conversations are messy: idioms, slang, and emotions all complicate things.

Implants carry risks, such as infections and device failures. Not everyone qualifies; brain health varies. And training the AI takes time: participants in the study underwent training sessions to fine-tune the system.

Then there’s the tech itself. More sensors could boost precision, but that means more invasive procedures. Algorithms need refining to handle diverse accents, languages, and even dialects. Right now, the system is English-focused, though expansion to other languages may be on the horizon.

Critics raise another question: what about non-verbal thinkers? Not everyone experiences vivid inner speech. Some rely on visuals or abstract concepts. BCIs must adapt to varied cognitive styles.

Real-Life Stories: Voices from the Participants

Picture this. One participant, battling ALS, imagined simple sentences. The screen lit up with decoded words, matching his thoughts. “It’s liberating,” he might say, if he could. Instead, the BCI speaks for him.

Another, post-stroke, tallied shapes silently. The system echoed his count, proving it catches unsolicited inner talk. These aren’t just data points; they’re glimpses into restored autonomy.

Researchers share anecdotes, too. “We saw the brain light up differently for inner speech,” one noted. It’s raw, human, reminding us tech serves people, not the other way around.

The Science Behind It: Neural Substrates and AI Integration

Dig deeper into the brain science. Inner speech draws from the motor cortex, but also temporal regions for voice simulation. Studies show right-hemisphere involvement when mimicking dialogues, adding layers to solo monologues.

AI plays the starring role here. Machine learning models sift through neural noise, mapping signals to phonemes, then to words. It’s like teaching a computer to eavesdrop on thoughts (ethically, of course).
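The "signals to phonemes, then words" step can be sketched as follows. Speech BCIs often have a neural network emit a score for each phoneme (plus a "blank" symbol) at every time step, in the style of CTC (connectionist temporal classification), and then assemble words. This minimal sketch assumes that setup, uses greedy CTC decoding, and replaces the language model that real systems rely on with a tiny hypothetical pronunciation lexicon. The phoneme set, lexicon, and frame scores are all made up for illustration.

```python
import numpy as np

BLANK = "_"
PHONEMES = [BLANK, "HH", "AY", "W", "ER", "L", "D"]  # tiny hypothetical phoneme set
# Hypothetical lexicon mapping phoneme sequences to words (stands in for a language model).
LEXICON = {("HH", "AY"): "hi", ("W", "ER", "L", "D"): "world"}


def greedy_ctc(frame_scores):
    """Pick the top-scoring symbol per frame, merge repeats, then drop blanks."""
    best = [PHONEMES[i] for i in np.argmax(frame_scores, axis=1)]
    collapsed = [p for k, p in enumerate(best) if k == 0 or p != best[k - 1]]
    return [p for p in collapsed if p != BLANK]


def phonemes_to_words(phonemes):
    """Greedy longest-match segmentation of a phoneme sequence into lexicon words."""
    words, i = [], 0
    max_len = max(len(key) for key in LEXICON)
    while i < len(phonemes):
        for length in range(min(max_len, len(phonemes) - i), 0, -1):
            chunk = tuple(phonemes[i:i + length])
            if chunk in LEXICON:
                words.append(LEXICON[chunk])
                i += length
                break
        else:
            words.append("<unk>")  # no lexicon word starts here; skip one phoneme
            i += 1
    return words


# Simulated per-frame scores: the intended phoneme gets 0.9, everything else 0.01.
frames = ["HH", "HH", "AY", BLANK, "W", "ER", "ER", "L", "D", BLANK]
scores = np.full((len(frames), len(PHONEMES)), 0.01)
for t, label in enumerate(frames):
    scores[t, PHONEMES.index(label)] = 0.9
print(phonemes_to_words(greedy_ctc(scores)))  # ['hi', 'world']
```

Working at the phoneme level is what lets a decoder cover a very large vocabulary: it only has to learn a few dozen sound units, and a lexicon or language model handles which combinations form real words.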

Conjunction analyses in related research link this to theory-of-mind networks: medial prefrontal cortex, posterior cingulate. Generating internal chats isn’t mere repetition; it’s creative, social, even in solitude.

Phenomenologically, inner speech varies. Some hear full sentences; others, fragments. Developmentally, it stems from childhood; Vygotsky theorized it as internalized social speech, evolving from overt talk to silent reflection.

In atypical populations, like those with aphasia, inner speech might persist even when external speech fails. Neuropsychological evidence supports its role in working memory and self-regulation.

Future Prospects: Toward Fluent, Natural Communication

Looking ahead? The future’s bright, if researchers like Frank Willett are right. “Speech BCIs could one day restore communication as fluent as conversational speech,” he says.

Advancements might include non-invasive options, such as EEG caps instead of implants, though these are less precise. Hybrid systems could combine inner-speech decoding with eye-tracking.

Imagine applications beyond paralysis: aiding aphasia recovery, enhancing augmented reality. Or in education, helping those with dyslexia rehearse reading internally.

But hurdles remain. Ethical debates on mental privacy intensify. Who owns decoded thoughts? Regulations must evolve alongside tech.

Funding is key, too. Government grants and private investment (think of the buzz around Neuralink) drive progress. Collaborations across Stanford, Caltech, and other institutions accelerate discoveries.

In ongoing trials, additional participants are already testing refined devices. Accuracy is climbing and speeds are quickening. We’re on the cusp.

Expanding Horizons: Inner Speech in Cognitive Science

Let’s zoom out. Inner speech isn’t isolated; it’s woven into cognition. Psychologists link it to problem-solving: that “aha!” moment often bubbles up in self-talk.

Developmentally, children transition from egocentric speech spoken aloud to inner speech around age 7. It aids planning and emotional control.

Phenomenology-wise, it’s subjective. Some experience it auditorily; others, kinesthetically, feeling the words form. Varieties include dialogic (conversing with imagined others) versus monologic (solo narration).

Research indexed in PubMed Central highlights neural overlaps: the left inferior frontal gyrus for generation and right temporal areas for voice attributes. Functional MRI shows precuneus activation in complex scenarios.

Among atypical groups, people with schizophrenia sometimes report intrusive inner voices. BCIs could offer therapeutic insights into how the brain distinguishes self-generated speech from other voices.

Neuropsychology adds: lesions disrupting inner speech impair verbal memory, hinting at its functional core.

Implications for Society: Equity, Access, and Ethical Frontiers

Society-wise, this tech could level the playing field. Marginalized by disability? Not anymore. But equity matters: who gets access? High costs might exclude low-income groups.

Globally, adapting decoders to other languages would expand their reach. In India, for instance, with rising stroke rates, localized BCIs could transform care.

Ethically, consent’s crucial. Participants in studies undergo rigorous screening. Privacy strategies, like the password lock, set precedents.

Here’s the kicker: as BCIs decode more, they might reveal subconscious biases or hidden emotions. Safeguards against misuse in legal or corporate settings are vital.

Wrapping Up the Inner Dialogue

We’ve covered a lot: from neural basics to futuristic visions. This breakthrough in decoding inner speech marks a pivotal moment in neuroscience. For paralysis patients, it’s a beacon of hope, restoring voices long silenced.

Challenges persist, sure. But progress is undeniable. As tech refines, expect more stories of reclaimed communication. It’s not just about words; it’s about connection. And that, if I’m honest, is what makes this so profoundly human.