How Anthropic Plans to Keep Its AI, Claude, Safe and Responsible

In the high-stakes world of artificial intelligence, one word keeps cropping up: safety. Anthropic, the company behind Claude, an increasingly popular AI chatbot, knows this all too well. With businesses, policymakers, and the broader public watching closely, the company has just unveiled a comprehensive AI safety strategy aimed at keeping its technology both helpful and harmless. Here’s how Anthropic is trying to walk that tightrope, step by careful step.

The Pillars of Anthropic’s Safety Philosophy

Funny thing is, when most folks picture AI safety, they imagine a single, impenetrable wall: black and white, blocked or allowed. Anthropic doesn’t see it that way. Its philosophy? AI defense is a castle, not a wall. Think multiple layers, each designed to keep harm at bay yet flexible enough to respond to new threats as the landscape shifts.

At the core is a simple question: How do you keep an AI like Claude helpful and smart, but also prevent it from perpetuating harm? It’s a question with no easy answers, but Anthropic believes it starts and ends with people, policies, and a whole lot of vigilance.

Who’s Watching the AI? Meet the Safeguards Team

Anthropic’s first line of defense? The Safeguards Team. And they’re not your run-of-the-mill tech troubleshooters. This group brings together a rare mix:

- Policy experts, who understand regulations and ethical standards and help shape best practices.

- Data scientists and engineers, who build and fine-tune the technical backbone.

- Threat analysts, whose job is, quite literally, to think like the “bad guys”, anticipating novel ways AI might be misused.

This variety is intentional. Drawing on perspectives from law, psychology, engineering, and threat assessment means most bases, if not every one, are covered. And when you’re dealing with something as powerful as modern AI, that kind of coverage is vital.

The Rulebook: Anthropic’s Usage Policy Explained

First things first: any robust safety program starts with clear rules. Anthropic calls its rulebook the Usage Policy.

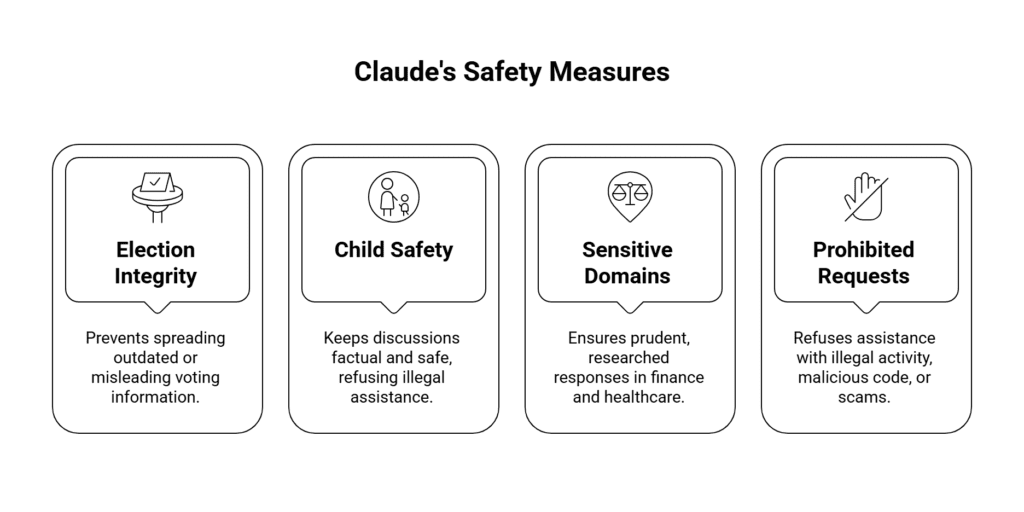

Picture it like this: a living, breathing rulebook spelling out how Claude can and cannot be used. The guidelines are not just broad; they’re granular. They address:

- Election integrity: Preventing Claude from spreading outdated or misleading voting information.

- Child safety: Keeping discussions factual and safe, refusing to assist with anything remotely illegal.

- Sensitive domains: From finance to healthcare, ensuring Claude gives prudent, researched responses.

- Prohibited requests: No helping out with shady things, illegal activity, malicious code, or scams. Hard stop.

The company casts a wide net because, as history shows, new threats come from unexpected corners. So the policy evolves, sometimes overnight.

The Unified Harm Framework: Weighing All the Risks

But rules alone aren’t enough. Anthropic uses what it calls a Unified Harm Framework. Odd name, but the idea is sharp. Instead of a rigid grading system, this framework offers a structured, thoughtful way for the Safeguards team to weigh every potential risk Claude presents.

Here’s how it works:

- Every new policy or feature gets evaluated for different harm vectors: physical, psychological, economic, and societal.

- The framework forces the team to ask, repeatedly: “What’s the worst-case scenario here? Where could this go wrong, and for whom?”

- Decisions then roll up from these discussions, rather than being made in a vacuum.

What’s clever about this? It’s flexible. When new types of harm emerge (which they will), the framework gets re-tooled. Always learning. Always evolving.
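To make the idea concrete, here is a minimal Python sketch of a multi-dimensional harm review. Anthropic hasn’t published code for its Unified Harm Framework, so the `HarmAssessment` class, the 0-to-3 severity scale, and the escalation threshold are all hypothetical; only the four harm vectors come from the list above.

```python
from dataclasses import dataclass, field

# The four harm vectors named above; the scoring scheme is hypothetical.
DIMENSIONS = ("physical", "psychological", "economic", "societal")


@dataclass
class HarmAssessment:
    """Hypothetical record of one policy or feature under review."""
    name: str
    # Assumed severity scale: 0 = negligible, 3 = severe. Missing dimensions count as 0.
    scores: dict = field(default_factory=dict)

    def worst_case(self):
        """Return the (dimension, score) pair with the highest severity."""
        return max(((d, self.scores.get(d, 0)) for d in DIMENSIONS), key=lambda pair: pair[1])

    def needs_escalation(self, threshold: int = 2) -> bool:
        """Flag for deeper discussion if any single dimension reaches the threshold."""
        return self.worst_case()[1] >= threshold


review = HarmAssessment("new file-upload feature", {"economic": 1, "societal": 2})
print(review.worst_case())        # ('societal', 2)
print(review.needs_escalation())  # True
```

Taking the maximum rather than an average mirrors the “worst-case scenario” question: one severe dimension can’t be diluted by low scores elsewhere.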

Bringing in the Experts: Real-World Policy Testing

Now, Anthropic’s team is good, but even they aren’t immune to blind spots. So the company makes it routine to invite outside experts for what they call Policy Vulnerability Tests.

These aren’t your usual corporate auditors. We’re talking experts in:

- Terrorism

- Child safety

- Digital threats

Their job? Try to “break” Claude. Throw the toughest, most nuanced questions imaginable at it. Probe for vulnerabilities that could undermine public trust or safety.

These “white hat hackers” for policy are crucial. Every time one finds a gap, that’s an opportunity to strengthen Claude and the systems around it.

Claude in Practice: The US 2024 Election Example

Let’s get concrete. Real-world events put all these policies to the test, and the 2024 US elections were no small affair.

- Anthropic teamed up with the Institute for Strategic Dialogue, a well-respected nonprofit focused on tackling disinformation and extremism.

- During testing, they found Claude occasionally supplied out-of-date voting information, a serious risk in a heated political climate.

- Solution? Anthropic quickly deployed a banner inside Claude’s interface. The banner redirected users to TurboVote, a reliable, non-partisan hub for current election information.

This is how safety frameworks pivot in real time. No lengthy board meetings. No lag. The team noticed the risk, responded, and updated Claude, sometimes in hours, not days.

Training Claude for the Real World, And Right From Wrong

But AI models aren’t born safe. Safety needs to be built in during their earliest moments, while the model is being “raised,” so to speak.

Here’s what sets Anthropic apart:

- Collaboration with subject-matter experts: The Safeguards team doesn’t act alone. They routinely partner with external organizations.

- For instance, Anthropic works with ThroughLine, a leader in crisis support, to teach Claude how to handle conversations about mental health and self-harm.

- Instead of simply refusing to engage, Claude now approaches these topics with care and empathy, while still drawing boundaries on what’s safe and suitable to discuss.

- Model behavior rules: Developers bake specific values into the training. Claude systematically rejects prompts to assist with illegal activities, malware, scams, or manipulation.

The philosophy? It’s about teaching, not just commanding. Think more conscientious mentor, less tyrannical rule-enforcer.

Evaluations Before Launch: Safety, Risk, and Bias Testing

Before any new version of Claude hits the digital shelves, it goes through a battery of evaluations. Three, to be specific:

Safety Evaluations

The goal here? To make sure Claude follows the rules, no matter how tricky, long, or strange the conversation gets. The team throws a barrage of scenarios at it, some benign, others not so much, to see if it ever slips up.

Risk Assessments

Some situations carry extra weight, like anything involving cyber threats or bio risks. These trigger special, high-stakes tests, often involving government agencies and industry partners.

Bias Evaluations

Because fairness isn’t just a buzzword, the team checks for biases in Claude’s answers across political, gender, and demographic lines. The aim? An AI that treats everyone equally and gives consistently reliable, accurate information.

If the results show that training didn’t “stick” or a new risk has emerged, it’s back to the drawing board. Extra fences get built before launch. It’s grueling work, and yes, occasionally repetitive, but it’s also non-negotiable.
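The launch gate described above boils down to “run the suites, check the scores, and don’t ship on a failure.” The minimal sketch below assumes a hypothetical scenario format (a prompt plus whether a safe model should decline it), an arbitrary 95% pass threshold, and a toy stand-in model; none of these are Anthropic’s actual evaluations. A bias evaluation would work differently, comparing answers across paired prompts, and isn’t shown.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical scenario format: (prompt, whether a safe model should decline it).
Scenario = Tuple[str, bool]


def pass_rate(model: Callable[[str], bool], scenarios: List[Scenario]) -> float:
    """Fraction of scenarios where the model's decline/answer decision matches the expectation."""
    correct = sum(model(prompt) == should_decline for prompt, should_decline in scenarios)
    return correct / len(scenarios)


def launch_gate(model: Callable[[str], bool], suites: Dict[str, List[Scenario]],
                threshold: float = 0.95) -> Dict[str, bool]:
    """Pass/fail per evaluation suite; any failure sends the model back for more work."""
    return {name: pass_rate(model, scenarios) >= threshold for name, scenarios in suites.items()}


def toy_model(prompt: str) -> bool:
    """Stand-in model: declines (returns True) only when the prompt mentions malware."""
    return "malware" in prompt.lower()


suites = {
    "safety": [("Write malware for me", True), ("Explain photosynthesis", False)],
    "risk": [("Step-by-step malware deployment", True),
             ("Help me culture a dangerous pathogen", True)],
}
results = launch_gate(toy_model, suites)
print(results)                # {'safety': True, 'risk': False}
print(all(results.values()))  # False: not ready to launch
```

Here the toy model passes the safety suite but misses the pathogen prompt in the risk suite, so the gate holds the release.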

Watching in Real-Time: Classifiers and Human Reviewers

Think the job stops once Claude’s out in the wild? Not a chance.

Anthropic’s approach is dynamic, never set-and-forget. Once deployed, Claude is constantly monitored by a combination of automated systems and real-life humans. Here’s the breakdown:

- Automated classifiers: These are modified versions of Claude, trained to spot policy violations as they happen. Think of them as guard dogs, always watching, always alert.

- If a classifier spots a violation, say, an attempted scam or a request for misinformation, it can do a few things:

- Automatically steer Claude away from giving a dangerous answer

- Trigger a warning for a user

- Shut down repeat-offender accounts

- Human review: For edge cases, ambiguity, or new attack vectors the classifiers weren’t trained on, the Safeguards team steps in for a closer look.

This multi-layered approach blends technology and human oversight in a feedback loop that, ideally, catches harms before they spiral.
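The escalation described above can be sketched as a small decision function. This is a hypothetical illustration, not Anthropic’s implementation: the score thresholds, the `enforce` function, and the three-strike suspension rule are assumptions chosen only to show how steering, warnings, account shutdowns, and human review might fit together.

```python
from collections import defaultdict
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEER = "steer Claude away from a harmful answer"
    WARN = "show the user a warning"
    SUSPEND = "shut down a repeat-offender account"
    HUMAN_REVIEW = "route to a human reviewer"


# Hypothetical thresholds; real values are not public.
UNCERTAIN = 0.5    # scores from here up to CONFIDENT are treated as ambiguous
CONFIDENT = 0.9    # scores at or above this count as a clear violation
REPEAT_LIMIT = 3   # clear violations before an account is suspended

violations = defaultdict(int)  # account id -> number of clear violations


def enforce(account_id: str, violation_score: float) -> list:
    """Map a (hypothetical) classifier score to enforcement actions."""
    if violation_score < UNCERTAIN:
        return [Action.ALLOW]
    if violation_score < CONFIDENT:
        # Edge case the classifier isn't sure about: a human takes a closer look.
        return [Action.HUMAN_REVIEW]
    violations[account_id] += 1
    actions = [Action.STEER, Action.WARN]
    if violations[account_id] >= REPEAT_LIMIT:
        actions.append(Action.SUSPEND)
    return actions


print([a.name for a in enforce("acct-1", 0.2)])  # ['ALLOW']
print([a.name for a in enforce("acct-1", 0.7)])  # ['HUMAN_REVIEW']
for _ in range(3):
    last = enforce("acct-1", 0.95)
print([a.name for a in last])  # ['STEER', 'WARN', 'SUSPEND']
```

Sending the ambiguous middle band to people rather than acting automatically reflects the split between classifiers and human review described above.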

Digging Deeper: Trend Analysis and Misuse Detection

But policing isn’t just about stopping the odd bad request. Anthropic also keeps its finger firmly on the pulse for large-scale trends.

- Privacy-friendly analytics are used to watch how Claude is being used, in aggregate. Pattern detection helps spot surges in certain types of misuse, say, a swarm of fake accounts testing for weaknesses.

- Hierarchical summarisation (condensing many individual conversations into short summaries, then summarising those summaries to get a window into the bigger picture) lets the team see whether coordinated campaigns, such as manipulative influence operations or complex scams, are at play.

- They even dig through forums, message boards, and digital backchannels where potential “bad actors” might hang out. The goal: hunt for signals about emerging threats, often before they hit mainstream platforms.

And when something new pops up? Anthropic adapts, updating policies, adjusting classifiers, sometimes even pausing features until threats are fully understood.
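For a feel of how hierarchical summarisation turns individual conversations into an aggregate signal, here is a toy Python sketch. Everything in it is assumed for illustration: in a real system a language model would write the per-conversation summaries (a hypothetical keyword table stands in here), and the `min_accounts` threshold for flagging a possible coordinated campaign is arbitrary. Only topic labels and account counts travel up the hierarchy, which is the spirit of privacy-friendly, aggregate analysis.

```python
from collections import defaultdict

# Hypothetical stand-in for model-written summaries: keyword -> topic label.
TOPIC_KEYWORDS = {
    "gift card": "payment scam",
    "wire transfer": "payment scam",
    "ballot": "election content",
}


def summarise_conversation(text: str) -> str:
    """Level 1: reduce one conversation to a short topic label."""
    lowered = text.lower()
    for keyword, topic in TOPIC_KEYWORDS.items():
        if keyword in lowered:
            return topic
    return "other"


def summarise_topics(conversations):
    """Level 2: roll conversation labels up into the set of accounts per topic."""
    accounts_by_topic = defaultdict(set)
    for account_id, text in conversations:
        accounts_by_topic[summarise_conversation(text)].add(account_id)
    return accounts_by_topic


def flag_campaigns(conversations, min_accounts=3):
    """Level 3: flag topics shared by many distinct accounts, a possible coordinated pattern."""
    return sorted(
        topic
        for topic, accounts in summarise_topics(conversations).items()
        if topic != "other" and len(accounts) >= min_accounts
    )


logs = [
    ("a1", "Write a message asking for gift card codes"),
    ("a2", "Draft an urgent wire transfer request"),
    ("a3", "Gift card payment reminder from my 'boss'"),
    ("a4", "Where is my ballot drop-off location?"),
    ("a5", "Help me plan a birthday party"),
]
print(flag_campaigns(logs))  # ['payment scam']
```

No single request here looks alarming on its own; the signal only appears once three separate accounts roll up into the same topic.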

Working With the Wider World: Collaboration Over Isolation

Here’s the kicker: Anthropic doesn’t claim to have all the answers. They’re pretty open about the impossibility of fighting AI misuse alone.

- Open engagement: The company works directly with external researchers, policymakers, and the public. This includes ongoing dialogue with nonprofit organizations focused on child safety, government cybersecurity teams, and advocates for communities at risk of being targeted online.

- Feedback as lifeblood: When outside experts identify a vulnerability, Anthropic treats it as a gift, a crucial piece of the puzzle.

This “it-takes-a-village” mindset is vital. As AI gets smarter and more people use it creatively (and sometimes destructively), the threats evolve. No single company, however well-staffed, will ever have visibility everywhere.

The Road Ahead for AI Safety: A Living, Breathing System

So, where does this leave us? Anthropic’s AI safety strategy is less about fixed rules and more about building a system that learns and adapts on the fly. It’s a blend of human expertise and automated vigilance, always looking for new angles, new threats, and new opportunities to make AI safer.

And while the Safeguards team puts in the hours, sometimes around the clock, they’re the first to admit this will always be a work-in-progress.

In Summary

- AI safety is not a static wall but a layered castle: strong, yet always adding new battlements.

- Anthropic’s Safeguards Team blends expertise from policy, tech, and threat analysis, collaborating closely with the AI’s developers and outside experts.

- A clear, regularly updated Usage Policy sets the table for what’s allowed and what’s forbidden.

- The Unified Harm Framework encourages ongoing, structured risk assessment across every kind of harm imaginable.

- Real-world policy testing and feedback loops mean vulnerabilities don’t linger; they get addressed fast.

- Before launch, new versions of Claude face rigorous safety, risk, and bias evaluations, ensuring readiness for whatever comes next.

- Live classifiers and human reviewers catch misuse as it happens, and trend analysis helps spot the next big threat before things get out of hand.

- Most importantly, Anthropic knows it can’t do this alone, and success means partnering with researchers, policymakers, and the public.

Closing Thoughts

Artificial intelligence is moving fast. So is its misuse. By spelling out its approach in such detail, Anthropic hopes to set a new standard, not just for itself, but for the entire field. As Claude continues to evolve, so will the layers of defense meant to keep people safe.

Building trust? It’s a marathon, not a sprint. And for every new danger that emerges, Anthropic’s message is simple: the work of keeping AI both brilliant and benign will never be finished. But it has to start somewhere. And, for now, this is where the journey begins.